A Better Practices Guide to Using Claude Code


Disclosure: This article uses affiliate links. Items I recommend may generate a small commission for me if you purchase them via my link.

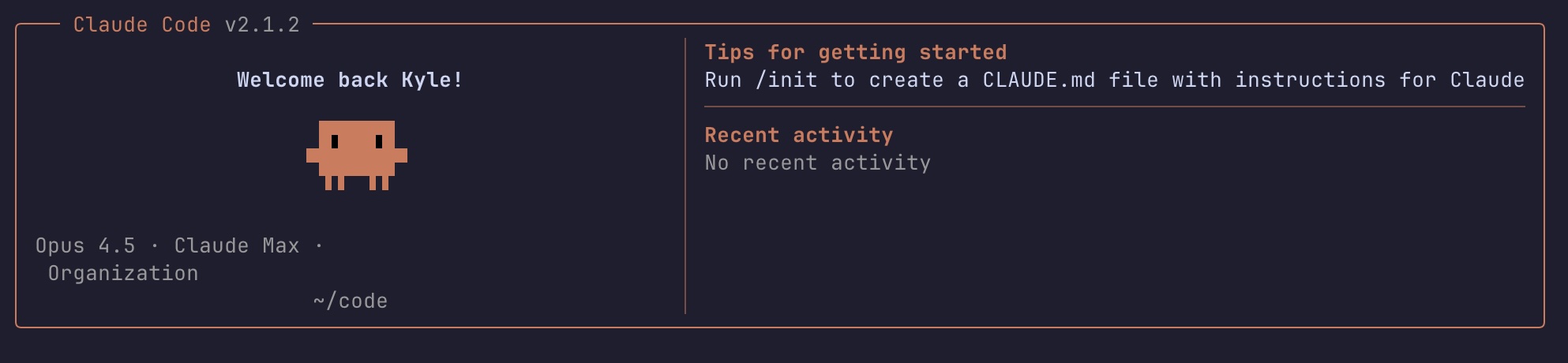

This is adapted from an internal document I built for a team I’m working on part-time. If some later sections feel AI-y, that’s because I had Claude help fill them out. I normally don’t do this for writing I intend to publish, but this was supposed to be a quick internal guidance document that turned into this deep dive. Even the few parts that AI fleshed out from many bullet points are useful, however, and I hope this serves as a solid reference guide to the many ways you can harness the power of Claude Code. And do yourself a favor: use Opus 4.5 (or later) by default.

Overview

This document aims to teach some discovered “best” practices to get the most out of Claude Code. These practices and tools are changing rapidly, so expect this to go out of date at some point. I’ll do my best to keep this updated. This is pretty long, so I’d recommend reading from the Introduction section through the Prompting Best Practices section, getting set up, and then reading the overviews of all the following sections.

When you’re ready to incorporate a section into your workflow, read through the whole section and try out some of the workflows, techniques, or tools described.

Introduction

Claude Code is a CLI LLM coding assistant built by Anthropic to speed up the development process for individual coders and teams. It can read your codebase, run shell commands, edit files, and iterate autonomously until a task is complete.

It is intentionally unopinionated and customizable. It won’t stop you from making bad decisions. Used well, it dramatically accelerates development. Used poorly, it can waste tokens, introduce bugs, or make changes you didn’t intend.

But What About Claude Desktop?

Claude Code is extremely useful as an all-around agent and assistant, and for many it’s their primary way of interacting with Claude models, even outside of coding.

But Claude Code isn’t workable for all use cases. If you’re doing research, or even a simple search, that requires lots of obscure context spread across many different places and/or information from after the model’s training cutoff, Claude Desktop’s research mode is your ideal tool. The table below summarizes when to use which tool.

When to Use What

| Tool | Best For | Key Strength |
|---|---|---|
| Claude Code CLI | Coding tasks, file manipulation, automation | Direct filesystem/terminal access |
| Claude Desktop | Research, writing, rich formatted output | Deep research mode, shareable artifacts |
| VS Code Extension | Claude Code with tighter IDE integration | Side-panel chat, better diff review |
| Claude Mobile | Quick questions on the go | Convenience |

Note: Claude Code can also run from Claude Desktop/Mobile via cloud containers, letting you work on repos without cloning locally.

Getting Started with Claude Code

Installation

- Requirements: Node 18+ and a supported OS
- Install: `npm install -g @anthropic-ai/claude-code`
- Verify: `claude --version`

First Run

- Run `claude` from your project root (Claude Code uses your working directory as context)
- Sign in (browser OAuth flow)
- Model selection: Opus (powerful, expensive) vs. Sonnet (fast, cheaper)
- Default behavior: Opus until 50% usage, then Sonnet
- Override with `--model opus` or `--model sonnet`

Understanding Permissions

Claude asks before modifying files or running potentially destructive commands. This is intentional.

Ways to reduce interruptions:

| Method | Scope | Use When |
|---|---|---|
| “Always allow” at prompt | Current session | One-off trust |
| `/permissions` command | Persistent | Managing allowlists interactively |
| `.claude/settings.json` | Project | Team-wide project-based defaults (check into git) |
| `~/.claude.json` | User | Personal preferences across projects |

Common allowlist patterns:

- `Edit`: allow all file edits
- `Bash(git commit:*)`: allow git commits
- `Bash(npm test:*)`: allow test runs
- `mcp__<server>__<tool>`: allow specific MCP tools

Avoid: `--dangerously-skip-permissions` outside of sandboxed environments.
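Here's a minimal sketch of how those patterns might look in a project `.claude/settings.json`, written from the shell. It assumes the `permissions.allow` / `permissions.deny` layout described in the official settings reference. The `deny` rule for `.env` is an illustrative addition and not something this guide prescribes, so check the schema against your installed version before you commit it.

```sh
#!/bin/sh
# Sketch: write a team-wide allowlist to the project settings file.
# Assumes the permissions.allow / permissions.deny schema from the settings docs.
set -eu

mkdir -p .claude

# The quoted 'EOF' keeps the shell from expanding anything inside the JSON.
cat > .claude/settings.json <<'EOF'
{
  "permissions": {
    "allow": [
      "Edit",
      "Bash(git commit:*)",
      "Bash(npm test:*)"
    ],
    "deny": [
      "Read(./.env)"
    ]
  }
}
EOF

echo "Wrote .claude/settings.json"
```

Afterwards, running `/permissions` inside Claude Code should list these rules. That gives you a quick way to confirm they were picked up.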

Settings

- Scope hierarchy: user (`~/.claude.json`) → project (`<PROJECT ROOT>/.claude/settings.json`)
- Full reference: https://code.claude.com/docs/en/settings

| Setting | Location | What It Does |
|---|---|---|
| `model` | CLI flag or settings | Default model (`opus`, `sonnet`, `haiku`) |
| `allowedTools` | `.claude/settings.json` | Pre-approved tools/commands |
| `mcpServers` | `.claude/settings.json` | MCP server configurations |
| `sandbox` | `.claude/settings.json` | Enable container isolation |


Team Tip: Check .claude/settings.json into version control to share permissions and MCP configs across your team.

Sandboxing (Recommended for Autonomous Work)

Running Claude Code in a container lets you safely enable auto-approve mode for long-running tasks.

- Use Docker or VS Code Dev Containers
- Once sandboxed, `--dangerously-skip-permissions` becomes reasonably safe, since most mistakes stay inside the container
- Reference implementation: https://github.com/anthropics/claude-code/tree/main/.devcontainer
- Full guide: https://code.claude.com/docs/en/sandboxing

Recommended Tooling

- `gh` CLI: enables Claude to create PRs, manage issues, and search git history
  - Install: `brew install gh` / `apt install gh`
  - Authenticate: `gh auth login`
  - No additional configuration required
- Optional: Run `/install-github-app` to enable automatic PR reviews via @claude mentions. This installs a GitHub App on your repo that triggers Claude to review PRs when you mention @claude in the PR.


Workflow Patterns

The PM Mindset

The biggest mistake new users make is typing a task and expecting perfect output.

Claude Code works best when you treat it like a capable but context-blind junior developer. You’re the PM. Your job is to:

- Provide context before asking for work

- Break down ambiguous tasks into verifiable steps

- Review output before approving changes

- Course correct early rather than letting Claude go down wrong paths

A well-scoped task with clear success criteria will save you more time than a vague prompt that requires three do-overs.

Core Workflow: Explore → Plan → Code → Commit

This is the canonical pattern for most non-trivial tasks.

Phase 1: Explore and Plan (Plan Mode)

Enter Plan Mode to prevent Claude from making changes while it researches.

| Action | Method |
|---|---|
| Toggle Plan Mode | Shift+Tab or `/plan` |
| Visual indicator | You’ll see “Plan Mode” in the interface |

In Plan Mode, Claude:

- Reads files and explores the codebase
- Uses the built-in `Plan` subagent for research
- Proposes approaches and identifies edge cases
- Cannot edit files or run mutating commands

Example session:

```text
[Plan Mode ON]

You: I need to add OAuth support to our auth module.
Explore the current implementation and propose a plan.

Claude: [Reads auth files, checks dependencies, reviews patterns]
Here's what I found...
Here's my proposed approach...

You: Think harder about the token refresh edge case.

Claude: [Extended reasoning]
Updated plan with refresh handling...

You: Write this plan to docs/OAUTH_PLAN.md

Claude: [Writes plan file; allowed because it's additive documentation]
```

Why write the plan to a file? If implementation goes wrong, you can /clear, reload the plan file, and try again without re-doing the research phase.

Phase 2: Implement (Exit Plan Mode)

Once the plan looks right, toggle back to normal mode.

```text
[Shift+Tab to exit Plan Mode]

You: Implement the plan in docs/OAUTH_PLAN.md step by step.
After each step, verify it works before continuing.
Write tests as you go.
```

Now Claude can edit files and run commands. The plan file serves as the spec.

Phase 3: Commit and Close

```text
You: Commit these changes with a descriptive message.
Then create a PR summarizing what we did.
```

If you have gh installed, Claude handles the full PR flow.

Quick Reference

| Phase | Mode | Claude Can | Prompt Pattern |
|---|---|---|---|
| Explore | Plan Mode | Read, search, reason | “Explore X. Don’t write code yet.” |
| Plan | Plan Mode | Propose, document | “Think hard. Write plan to file.” |
| Implement | Normal | Edit, run, test | “Implement step by step. Verify each step.” |
| Commit | Normal | Git operations | “Commit and create PR.” |

Variant: TDD Loop

For changes with clear correctness criteria, let tests drive the iteration.

- Write tests first: “Write tests for the new rate limiter. Cover the happy path and the edge case where the user exceeds the limit. Don’t implement the feature yet.”

- Confirm tests fail: “Run the tests and confirm they fail.”

- Implement: “Now implement the rate limiter to pass the tests. Don’t modify the tests.”

- Iterate: Claude runs tests, fixes failures, repeats until green.

- Commit: “Commit the implementation.”

This works because Claude has a clear target to iterate against. The tests are the spec.

Variant: Visual Iteration (Frontend)

For UI work, give Claude a visual target.

- Provide a mock: Paste a screenshot, drag-drop an image, or give a file path

- Implement: “Build this component to match the mock.”

- Screenshot result: Use Puppeteer MCP or paste a screenshot manually

- Compare and iterate: “Here’s what it looks like now. Adjust to match the original more closely.”

- Repeat until satisfied

Claude’s first attempt is usually 70% there. Two or three iterations get you to 95%.

Technique: Codebase Q&A

Use Claude Code to learn unfamiliar codebases. No special prompting is required; just ask questions:

- “How does logging work in this project?”
- “What’s the data flow from API request to database write?”
- “Why does `CustomerService` extend `BaseService` instead of implementing an interface?”
- “Find all the places where we handle auth token refresh.”

This replaces hours of grep and documentation diving. It’s particularly useful for onboarding new team members.

Technique: Git and GitHub Operations

Claude with `gh` installed can handle most git workflows:

| Task | Example Prompt |
|---|---|
| Search history | “Who last modified the payment module and why?” |
| Write commits | “Commit these changes with a descriptive message” |
| Create PRs | “Create a PR for this branch with a summary of changes” |
| Resolve conflicts | “Help me resolve this merge conflict” |
| Review PRs | “Review the open PR and summarize the changes” |

Tip: Explicitly prompt Claude to check git history when asking “why” questions. “Look through git history to understand why this API was designed this way.”

Prompting Best Practices

The Core Principle: Encode Intent Upfront

The biggest prompting mistake isn’t poor phrasing — it’s correcting Claude after it writes bad code instead of preventing bad code in the first place.

Claude has no knowledge of your team’s conventions, architectural decisions, or hard-won lessons. By default, it generates whatever patterns it saw most often in training data. The fix: encode your standards into CLAUDE.md or Skills so every prompt starts with your context.

Example: Dagster’s team codified their Python philosophy into rules they load into every Claude session. Instead of reviewing and rewriting LLM output, they get code that matches their standards on the first pass. See: Dignified Python: 10 Rules to Improve Your LLM Agents

What belongs in your team’s rules (see the CLAUDE.md section for an example CLAUDE.md file):

- Language idioms (e.g., “prefer LBYL over EAFP,” “never swallow exceptions”)

- Architectural patterns (e.g., “use repository pattern for data access”)

- Naming conventions (e.g., “use snake_case, prefix private methods with _”)

- Testing philosophy (e.g., “no mocks for unit tests,” “integration tests hit real DB”)

- Error handling (e.g., “let exceptions bubble up unless at an API boundary”)

Specificity: Vague vs. Precise Prompts

Claude can infer intent, but it can’t read minds. Specific prompts reduce iterations.

| Vague | Precise |
|---|---|
| “Add tests for foo.py” | “Write a test case for foo.py covering the edge case where the user is logged out. Don’t use mocks.” |
| “Why is this API weird?” | “Look through git history to find when ExecutionFactory was introduced and summarize why its API evolved this way.” |
| “Add a calendar widget” | “Look at HotDogWidget.php to understand our widget patterns. Build a calendar widget that lets users select a month and paginate years. No external libraries beyond what we already use.” |

Patterns that improve specificity:

- Name the files, functions, or modules involved

- State what not to do (no mocks, don’t modify tests, don’t use library X)

- Reference existing code as a pattern to follow

- Define the success criteria explicitly

Thinking Triggers

Use these phrases to allocate more reasoning time during planning:

| Phrase | Budget | Use When |
|---|---|---|
| `think` | Standard | Default exploration |
| `think hard` | Extended | Multiple valid approaches |
| `think harder` | More extended | Complex tradeoffs, edge cases |
| `ultrathink` | Maximum | Architecture decisions, subtle bugs |

These are mapped to actual token allocations in Claude Code, not just suggestions.

Example:

```text
Think harder about how token refresh should work when the user
has multiple tabs open. What race conditions could occur?
```

Providing Context

Claude works better with more context. Use these methods:

| Method | How | Best For |
|---|---|---|
| File references | Tab-complete paths in prompt | Pointing to specific code |
| Images | Paste (Ctrl+V), drag-drop, or file path | UI mocks, diagrams, error screenshots |
| URLs | Include URL in prompt | Documentation, Stack Overflow, specs |
| Piped data | `cat log.txt \| claude` | Logs, CSVs, large text files |
| @ mentions | `@filename.py` | Quick file references |

Tip: On macOS, Cmd+Ctrl+Shift+4 screenshots to clipboard, then Ctrl+V (not Cmd+V) to paste into Claude Code.

Course Correction

Don’t wait for Claude to finish if it’s heading in the wrong direction.

| Action | Shortcut | When to Use |
|---|---|---|
| Interrupt | Escape | Claude is going off-track |
| Rewind | Escape twice | Try a different approach from earlier |
| Reset context | `/clear` | Starting a new task; old context is noise |
| Checkpoint | “Write this to PLAN.md” | Before risky implementation work |

The /clear habit: Use it liberally between tasks. Accumulated context from previous work can confuse Claude and waste tokens.

Checklists for Complex Tasks

For large tasks (migrations, bulk refactors), have Claude maintain external state:

```text
1. Run the linter and write all errors to TODO.md as a checkbox list.
2. Fix each item one at a time. Check off each item after fixing.
3. After each fix, run the linter again to confirm it's resolved.
```

This prevents drift, gives you visibility, and lets Claude track progress across a long session.
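To check progress on that TODO.md yourself without asking Claude, a small shell function can count the boxes. This is a hypothetical helper. It assumes the GitHub-style `- [ ]` / `- [x]` checkbox syntax, so adjust the patterns if Claude formats the list differently.

```sh
#!/bin/sh
# Hypothetical helper: summarize a checkbox-style TODO.md.
# Assumes items look like "- [ ] open task" and "- [x] finished task".
todo_progress() (
  file=${1:-TODO.md}
  # grep -c prints 0 and exits non-zero when nothing matches; "|| true" keeps going.
  open=$(grep -c '^- \[ \]' "$file") || true
  done_count=$(grep -ci '^- \[x\]' "$file") || true
  printf '%s done, %s remaining\n' "$done_count" "$open"
)
```

For example, a TODO.md with one checked item and two unchecked items makes `todo_progress TODO.md` print `1 done, 2 remaining`.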

Output Styles

You can set the output style with `/output-style`. These are the currently available styles:

- Default - Focused on efficiency and short responses.

- Explanatory - Explains choices Claude takes and patterns in the codebase. This is a good default to balance speed and understanding.

- Learning - A more pedagogical approach where Claude will ask you to write some code for practice. Useful to learn patterns in a codebase or a new language.

Anti-Patterns to Avoid

| Anti-Pattern | Why It Fails | Instead |
|---|---|---|
| “Make it better” | No success criteria | “Reduce latency by caching X” |
| Multi-part prompts | Claude loses track | One task per prompt, then follow-up |
| Assuming context | Claude doesn’t know your codebase | Reference files explicitly |
| Correcting after | Slower than preventing | Encode standards in CLAUDE.md |
| Never interrupting | Wasted tokens, wrong direction | Escape early, redirect |

Memory with CLAUDE.md

CLAUDE.md is a special file that Claude automatically loads into context at the start of every session. Think of it as onboarding documentation for Claude: it holds the things a new team member would need to know to contribute effectively.

Unlike Skills (which are invoked on-demand) or subagents (which run in isolated contexts), CLAUDE.md is always present. This makes it ideal for:

- Project-wide conventions that apply to every task

- Commands Claude should know how to run

- Gotchas and warnings specific to your codebase

- Style guidelines and architectural decisions

Where It Lives

Claude Code looks for CLAUDE.md files in multiple locations, loading them in order of specificity:

| Location | Scope | Use For |
|---|---|---|
| `~/.claude/CLAUDE.md` | All sessions | Personal preferences, global shortcuts |
| `CLAUDE.md` in the project root | This project | Team conventions, shared with git |
| Parent directories | Monorepo | Shared config across subprojects |
| Child directories | Submodules | Module-specific overrides |
| `CLAUDE.local.md` | Personal, not committed | Your preferences for this project |

Resolution order: Global → parent → project root → child directories. More specific files add to (not replace) broader ones.

Tip for monorepos: Put shared conventions in root/CLAUDE.md and module-specific instructions in root/packages/foo/CLAUDE.md. Claude loads both when you run from root/packages/foo/.
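To make the lookup order concrete, here's an illustrative shell function. It lists the CLAUDE.md files on the path from the filesystem root down to a given directory, outermost first. It models the behavior described above and is not Claude Code's actual implementation. It deliberately ignores `~/.claude/CLAUDE.md`, `CLAUDE.local.md`, and child directories.

```sh
#!/bin/sh
# Illustrative model only: print CLAUDE.md files from the outermost directory
# down to the starting directory, i.e. broader files before more specific ones.
# The ( ) body runs in a subshell, so each recursive call gets its own $dir.
claude_md_chain() (
  dir=$(cd "${1:-.}" && pwd) || exit 1
  # Recurse toward the root first so outer files are printed before inner ones.
  if [ "$dir" != "/" ]; then
    claude_md_chain "$(dirname "$dir")"
  fi
  if [ -f "$dir/CLAUDE.md" ]; then
    printf '%s\n' "$dir/CLAUDE.md"
  fi
)
```

In the tip's layout, `claude_md_chain root/packages/foo` prints the absolute paths of `root/CLAUDE.md` and then `root/packages/foo/CLAUDE.md`, which matches the "loads both" behavior.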

Getting Started: /init

The fastest way to create a CLAUDE.md is to let Claude generate one:

```text
/init
```

Claude will analyze your project structure, detect frameworks and tools, and generate a starter CLAUDE.md. Review and edit the result — it’s a starting point, not gospel.

What to Include

A good CLAUDE.md is concise and actionable. Aim for instructions Claude can follow, not documentation for humans.

Recommended sections:

```markdown
# Project: [Name]

## Commands
- `npm run build`: Build the project
- `npm run test`: Run tests (use `npm run test -- --watch` for TDD)
- `npm run lint`: Lint and auto-fix

## Code Style
- Use ES modules (import/export), not CommonJS (require)
- Prefer named exports over default exports
- Use TypeScript strict mode; no `any` without justification

## Architecture
- API routes live in `src/routes/`; follow existing patterns
- Database access goes through repositories in `src/repos/`
- Never import from `src/internal/` outside that directory

## Testing
- Unit tests use Vitest; no mocks except for external services
- Integration tests hit a real test database
- Run single test files during development: `npm run test -- path/to/file`

## Warnings
- The `legacy/` directory is deprecated; don't add new code there
- `CustomerService` has known race conditions; see issue #1234
```

Keep it short. If your CLAUDE.md exceeds ~100 lines, consider moving detailed reference material to Skills to take advantage of progressive disclosure.
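A tiny check you can run locally or in CI helps you stick to that ~100-line guideline. This is a hypothetical helper. The 100-line default simply mirrors the rule of thumb above and is not a limit Claude Code enforces.

```sh
#!/bin/sh
# Hypothetical helper: warn when CLAUDE.md grows past a line budget.
# The 100-line default mirrors the rule of thumb above; Claude Code doesn't enforce it.
claude_md_size_check() (
  file=${1:-CLAUDE.md}
  limit=${2:-100}
  lines=$(wc -l < "$file" | tr -d ' ')   # BSD wc pads the count with spaces
  if [ "$lines" -gt "$limit" ]; then
    echo "$file: $lines lines (over $limit); consider moving reference material to Skills"
    exit 1
  fi
  echo "$file: $lines lines (ok)"
)
```

The non-zero exit status on failure lets you drop it into a pre-commit hook or CI step.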

Tuning Your CLAUDE.md

CLAUDE.md is just a prompt. Treat it like one.

Techniques that improve adherence:

- Use emphasis for critical rules: `IMPORTANT:` or `YOU MUST`
- Be specific: “Use snake_case” not “Follow naming conventions”
- Include examples of correct patterns
- Run it through Anthropic’s prompt improver periodically

Iterate based on results. If Claude keeps making the same mistake, add a rule. If a rule isn’t being followed, rephrase it with more emphasis or examples.

Adding Instructions During Sessions

Press # during any session to add an instruction to CLAUDE.md without leaving the conversation:

```text
# Always run tests after making changes to src/auth/
```

Claude will add this to the appropriate CLAUDE.md file. This is useful for capturing lessons as you discover them.

Team Sharing and Governance

Personal CLAUDE.md (~/.claude/CLAUDE.md): Configure however you like. Your shortcuts, your preferences.

Project CLAUDE.md: Treat like code.

| Practice | Why |
|---|---|
| Check into git | Everyone gets the same Claude behavior |
| Require code review for changes | Conventions affect the whole team |
| Keep a CLAUDE.local.md for personal overrides | Don’t pollute shared config with individual preferences |
| Document why, not just what | Future teammates need context |
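If you go the CLAUDE.local.md route, make sure it actually stays out of git. Here's a hypothetical helper that adds it to `.gitignore` exactly once. Some Claude Code versions may already handle this for you, so check your `.gitignore` before relying on either approach.

```sh
#!/bin/sh
# Hypothetical helper: add an entry (default CLAUDE.local.md) to .gitignore once.
ensure_ignored() (
  entry=${1:-CLAUDE.local.md}
  gitignore=${2:-.gitignore}
  touch "$gitignore"
  # Already present as an exact, whole line: nothing to do.
  if grep -qxF "$entry" "$gitignore"; then
    exit 0
  fi
  # If the file doesn't end with a newline, add one so the entry gets its own line.
  if [ -s "$gitignore" ] && [ "$(tail -c 1 "$gitignore")" != "" ]; then
    echo >> "$gitignore"
  fi
  echo "$entry" >> "$gitignore"
)
```

Running it more than once is harmless, because the exact-line `grep -x` check skips entries that are already listed.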

Suggested workflow:

- Start with `/init` to generate a baseline
- Review as a team; merge to main
- Add rules incrementally via `#` or PRs
- Periodically audit: are rules being followed? Are any obsolete?

A 500-line CLAUDE.md that nobody maintains is a common anti-pattern. Start small, iterate based on actual problems.

Starter Template

Copy this as a starting point for new projects:

```markdown
# Project: [Your Project Name]

## Quick Start
- `[build command]`: Build the project
- `[test command]`: Run tests
- `[lint command]`: Lint code

## Code Style
- [Your language] version: [version]
- [2-3 key style rules]

## Architecture
- [Where key code lives]
- [Key patterns to follow]

## Testing
- [Testing framework and philosophy]
- [How to run single tests]

## Warnings
- [Known gotchas, deprecated areas, sharp edges]

## Workflow
- [Branch naming, commit message format, PR process]
```

CLAUDE.md vs. Skills vs. Subagents

| Mechanism | Loaded | Scope | Use For |
|---|---|---|---|
| CLAUDE.md | Always | Every task | Universal project conventions |
| Skills | On-demand | Matching tasks | Specialized workflows (PDF, testing, etc.) |
| Subagents | When invoked | Isolated context | Delegated subtasks (review, research) |

If you find yourself adding task-specific instructions to CLAUDE.md, consider creating a Skill instead.

Importing Other Files

For larger projects, you can split conventions across multiple files and import them into CLAUDE.md:

```markdown
# Project: MyApp

## Core Conventions
@docs/conventions/code-style.md
@docs/conventions/testing.md
@docs/conventions/api-patterns.md

## Module-Specific
@src/payments/CONVENTIONS.md
@src/auth/CONVENTIONS.md
```

How it works:

- Add `@path/to/file.md` on its own line
- Claude loads the referenced file into context at session start
- Paths are relative to the CLAUDE.md file’s location

When to use imports:

| Scenario | Approach |
|---|---|
| Small project (<100 lines of conventions) | Single CLAUDE.md |
| Large monorepo with shared + module-specific rules | Root CLAUDE.md imports shared files; module CLAUDE.md files import module-specific ones |
| Team with existing style guides | Import existing docs rather than duplicating |
| Conventions that change frequently | Separate file = smaller PR diffs |

Example structure for a monorepo:

```text
├── CLAUDE.md                # Imports shared conventions
├── docs/
│   └── conventions/
│       ├── typescript.md    # TS-specific rules
│       ├── testing.md       # Testing philosophy
│       └── api-design.md    # API patterns
├── packages/
│   ├── api/
│   │   └── CLAUDE.md        # Imports root + api-specific
│   └── web/
│       └── CLAUDE.md        # Imports root + web-specific
```

Root CLAUDE.md:

```markdown
# Monorepo Conventions

@docs/conventions/typescript.md
@docs/conventions/testing.md
```

packages/api/CLAUDE.md:

```markdown
# API Package

@../../docs/conventions/api-design.md

## API-Specific Commands
- `npm run dev`: Start dev server on :3000
- `npm run db:migrate`: Run pending migrations
```

Imported files don’t need YAML frontmatter — they’re just markdown that gets concatenated into context. Keep them focused on instructions, not documentation.
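To make "concatenated into context" concrete, here's an illustrative shell function that expands a CLAUDE.md the way this section describes. Each line that is just `@some/path.md` is replaced by that file's contents, resolved relative to the importing file. This is a simplified model, not Claude Code's implementation. It handles only one level of imports and leaves unresolvable lines untouched.

```sh
#!/bin/sh
# Illustrative model only: expand CLAUDE.md imports by one level.
# A line consisting solely of "@relative/path" is replaced by that file's
# contents, resolved relative to the directory of the importing file.
expand_imports() (
  file=$1
  base=$(dirname "$file")
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in
      "@"*" "*)
        printf '%s\n' "$line" ;;            # contains a space: ordinary prose
      "@"?*)
        target="$base/${line#@}"
        if [ -f "$target" ]; then
          awk 1 "$target"                   # splice in, always ending with a newline
        else
          printf '%s\n' "$line"             # unresolved: leave the line as-is
        fi ;;
      *)
        printf '%s\n' "$line" ;;            # regular line: pass through
    esac
  done < "$file"
)
```

Running `expand_imports packages/api/CLAUDE.md` on the monorepo example prints the API package file with the contents of `docs/conventions/api-design.md` spliced in where the `@../../docs/...` line was.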

Custom Slash Commands

Slash commands are reusable prompt templates stored as markdown files. When you type / in Claude Code, your custom commands appear alongside built-in ones.

Use slash commands for:

- Repeated workflows you run frequently (debug loops, PR creation, code review)

- Team-standardized processes (everyone runs the same deployment checklist)

- Complex prompts you don’t want to retype (multi-step tasks with specific instructions)

Think of them as saved prompts with optional parameters.

Where They Live

| Location | Scope | Invoked As |
|---|---|---|
| `.claude/commands/` | Project (check into git) | `/project:command-name` |
| `~/.claude/commands/` | Personal (all projects) | `/user:command-name` |

File naming: The filename becomes the command name. fix-issue.md becomes /project:fix-issue.
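As a quick sanity check on naming, this sketch lists the command files in both locations and prints the name each one would be invoked as. It uses the `/project:` and `/user:` prefixes described in this guide, and those prefixes are the assumption here: other Claude Code releases may display commands differently. Commands in subdirectories aren't handled.

```sh
#!/bin/sh
# Sketch: show the name each command file would be invoked as.
# Assumes the /project: and /user: prefixes described in this guide.
list_slash_commands() (
  project_dir=${1:-.claude/commands}
  user_dir=${2:-$HOME/.claude/commands}
  for f in "$project_dir"/*.md; do
    [ -e "$f" ] || continue              # glob matched nothing
    printf '/project:%s\n' "$(basename "$f" .md)"
  done
  for f in "$user_dir"/*.md; do
    [ -e "$f" ] || continue
    printf '/user:%s\n' "$(basename "$f" .md)"
  done
)
```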

Basic Structure

A slash command is a markdown file with optional YAML frontmatter:

```markdown
---
description: One-line description shown in the / menu
---

Your prompt template goes here.
This can be multiple paragraphs with full markdown formatting.
```

The description field is optional but recommended — it helps you (and teammates) understand what the command does when browsing the / menu.

Parameterization with $ARGUMENTS

Use `$ARGUMENTS` to pass values from the command invocation into your template:

File: .claude/commands/fix-issue.md

```markdown
---
description: Analyze and fix a GitHub issue by number
---

Analyze and fix GitHub issue #$ARGUMENTS.

1. Use `gh issue view $ARGUMENTS` to get issue details
2. Search the codebase for relevant files
3. Implement the fix
4. Write tests to verify
5. Commit with message "fix: resolve #$ARGUMENTS"
6. Create a PR linking to the issue
```

Invocation:

```text
/project:fix-issue 1234
```

Claude sees the fully substituted prompt with 1234 replacing $ARGUMENTS.
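Here's an illustrative emulation of that substitution, which is handy for previewing what a command will expand to before you run it. Claude Code does this internally, and the function below is only a model. It assumes that the frontmatter is not part of the prompt and that every literal `$ARGUMENTS` is replaced with everything typed after the command name.

```sh
#!/bin/sh
# Illustrative model only: preview what a command template expands to.
# Assumptions: the YAML frontmatter is not part of the prompt, and every
# literal "$ARGUMENTS" is replaced by all the words after the command name.
render_command() (
  template_file=$1
  shift
  # Pass the arguments through the environment so awk treats them literally.
  ARGS_VALUE="$*" awk '
    NR == 1 && $0 == "---" { in_fm = 1; next }   # opening frontmatter fence
    in_fm && $0 == "---"   { in_fm = 0; next }   # closing frontmatter fence
    in_fm                  { next }              # skip frontmatter keys
    {
      # Literal, non-regex replacement of each "$ARGUMENTS" on the line.
      rest = $0; out = ""
      while ((i = index(rest, "$ARGUMENTS")) > 0) {
        out = out substr(rest, 1, i - 1) ENVIRON["ARGS_VALUE"]
        rest = substr(rest, i + length("$ARGUMENTS"))
      }
      print out rest
    }' "$template_file"
)
```

For example, `render_command .claude/commands/fix-issue.md 1234` prints the numbered steps with `1234` wherever the template said `$ARGUMENTS`. The sketch uses `index`/`substr` instead of `gsub` so that characters like `&` in the arguments aren't treated specially.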

User vs. Project Commands

| Aspect | User Commands | Project Commands |
|---|---|---|
| Location | `~/.claude/commands/` | `.claude/commands/` |
| Shared with team | No | Yes (via git) |
| Prefix | `/user:` | `/project:` |
| Use for | Personal shortcuts | Team workflows |

Recommendation: Start with project commands. They’re version-controlled, reviewed, and shared. Use user commands only for personal preferences that wouldn’t benefit the team.

Examples

GitHub Issue Fixer

.claude/commands/fix-issue.md

```markdown
---
description: Analyze and fix a GitHub issue
---

Analyze and fix GitHub issue #$ARGUMENTS.

Follow these steps:
1. Run `gh issue view $ARGUMENTS` to get details
2. Understand the problem described
3. Search the codebase for relevant files
4. Implement the fix
5. Write tests to verify the fix
6. Ensure code passes linting and type checking
7. Commit with a descriptive message referencing #$ARGUMENTS
8. Push and create a PR

Use `gh` for all GitHub operations.
```

Quick Code Review

.claude/commands/review.md

```markdown
---
description: Review staged changes before committing
---

Review my staged changes:
1. Run `git diff --staged`
2. Check for:
   - Logic errors or bugs
   - Missing error handling
   - Security concerns
   - Violations of our code style (see CLAUDE.md)
3. Suggest improvements
4. If changes look good, draft a commit message

Do not commit yet; just review and report.
```

Test Coverage Check

.claude/commands/coverage.md

```markdown
---
description: Identify untested code paths
---

Analyze test coverage for $ARGUMENTS:
1. Read the source file
2. Identify all public functions and branches
3. Read the corresponding test file
4. List which functions/branches lack test coverage
5. Suggest specific test cases to add

Focus on edge cases and error paths.
```

Start of Day

~/.claude/commands/standup.md (user command)

```markdown
---
description: Morning context refresh
---

Help me get oriented for today:
1. Run `git log --oneline -10` to see recent commits
2. Run `gh pr list --author @me` to see my open PRs
3. Run `gh issue list --assignee @me` to see my assigned issues
4. Summarize what I was working on and what needs attention
```

Slash Commands vs. Skills

| Aspect | Slash Commands | Skills |
|---|---|---|
| Invocation | Explicit (`/project:name`) | Automatic (Claude matches based on task) |
| Structure | Single markdown file | Directory with `SKILL.md` + supporting files |
| Parameters | `$ARGUMENTS` | None (context comes from the task) |
| Use for | Explicit, repeatable workflows | Domain expertise Claude applies automatically |

If you want Claude to always apply certain knowledge when relevant, use a Skill. If you want to explicitly trigger a specific workflow, use a slash command.

Orchestrating Subagents from Commands

Slash commands can explicitly invoke subagents, combining repeatable workflows with specialized expertise:

File: .claude/commands/review-and-fix.md

```markdown
---
description: Review code with specialist, then fix issues
---

Review and fix $ARGUMENTS:
1. Spin up the code-reviewer subagent to analyze the file
2. Collect its findings
3. For each issue found:
   - Fix the issue
   - Verify the fix doesn't break tests
4. Summarize what was changed

Use the security-auditor subagent if any auth-related code is modified.
```

This pattern lets you:

- Define the workflow in the slash command

- Delegate expertise to purpose-built subagents

- Keep the main context clean (subagents run in isolated contexts)

See the Subagents section for how to create and configure subagents.

Tips

- Keep commands focused. One workflow per command. Chain commands manually if needed.
- Use `$ARGUMENTS` liberally. Commands without parameters are just saved prompts, which makes them less flexible.
- Include escape hatches. Add “If X isn’t clear, ask me before proceeding” to avoid runaway automation.
- Version control project commands. Treat them like code: review changes, document purpose.

Agent Skills

I’ve written separately about my personal experience working with Agent Skills.

What They Are

Skills are folders containing instructions, scripts, and resources that Claude loads dynamically when relevant to your task. Unlike CLAUDE.md (always loaded) or slash commands (explicitly invoked), Skills are automatically activated when Claude determines they’re relevant based on the task.

Think of Skills as onboarding guides for specialized tasks: everything Claude needs to know to handle a specific domain well.

Where They Live

| Location | Scope |
|---|---|
| `.claude/skills/` | Project (check into git) |
| `~/.claude/skills/` | Personal (all projects) |

Each Skill is a directory containing at minimum a SKILL.md file:

```text
.claude/skills/
└── api-testing/
    ├── SKILL.md              # Required: instructions + metadata
    ├── examples.md           # Optional: loaded on demand
    ├── patterns.md           # Optional: loaded on demand
    └── scripts/
        └── generate-mock.py  # Optional: executable by Claude
```

How Skills Are Loaded (Progressive Disclosure)

Skills use a three-tier loading system to conserve context:

| Tier | What Loads | When |
|---|---|---|
| Metadata | `name` and `description` from frontmatter | Session start (all skills) |
| Instructions | Body of `SKILL.md` | When Claude matches the skill to your task |
| Supporting files | Other `.md` files, scripts | When Claude determines they’re needed |

This means you can install many Skills at little context cost: until Claude needs a particular Skill, it only knows that the Skill exists.

Implication for authoring: Put essential instructions in SKILL.md. Put detailed reference material in separate files that Claude reads only when needed.
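To show what the metadata tier amounts to, here's an illustrative function. It prints only the frontmatter of each `SKILL.md` under a skills directory and stops reading at the closing `---`, so bodies and supporting files are never touched. It models the idea and is not how Claude Code itself loads Skills.

```sh
#!/bin/sh
# Illustrative model of the metadata tier: read only each SKILL.md's
# frontmatter and stop at the closing "---", never touching the body.
skill_metadata() (
  skills_dir=${1:-.claude/skills}
  for f in "$skills_dir"/*/SKILL.md; do
    [ -e "$f" ] || continue
    printf '== %s\n' "$(basename "$(dirname "$f")")"   # skill folder name
    awk 'NR == 1 && $0 == "---" { in_fm = 1; next }
         in_fm && $0 == "---"   { exit }
         in_fm                  { print }' "$f"
  done
)
```

The output is what a session starts with: a few lines per Skill, however long each SKILL.md body is.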

SKILL.md Structure

```markdown
---
name: api-testing
description: >
  Test REST APIs with comprehensive coverage. Use when writing API tests,
  testing endpoints, or debugging HTTP issues. Handles authentication,
  pagination, and error scenarios.
allowed-tools: Read, Write, Edit, Bash, WebFetch
---

# API Testing

## When to Use This Skill
- Writing tests for REST endpoints
- Debugging failing API calls
- Generating mock responses

## Approach
1. Start by reading the endpoint implementation
2. Identify all response codes and edge cases
3. Write tests covering happy path first, then errors
4. Use actual HTTP calls in integration tests, not mocks

## Patterns
See [patterns.md](./patterns.md) for common testing patterns.

## Scripts
Run `./scripts/generate-mock.py <endpoint>` to scaffold a mock response file.
```

Frontmatter fields:

| Field | Required | Description |
|---|---|---|
| `name` | Yes | Identifier for the skill |
| `description` | Yes | How Claude decides to use this skill; be specific |
| `allowed-tools` | No | Restrict which tools the skill can use |
| `context` | No | Set to `fork` to run in isolated subagent context |

Writing Good Descriptions#

The description field determines whether Claude activates your Skill. Vague descriptions won’t match; specific ones will.

| Bad | Good |
|---|---|
| “Helps with documents” | “Extract text and tables from PDF files, fill forms, merge documents. Use when working with PDFs or when user mentions forms or document extraction.” |
| “Testing utilities” | “Write and debug Playwright end-to-end tests. Use when creating browser tests, debugging selectors, or handling authentication flows in E2E tests.” |

Include in your description:

- Specific actions the skill enables

- Trigger words users would say

- File types or technologies involved
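Before relying on auto-activation, a small script can confirm that a SKILL.md has both required frontmatter fields and flag descriptions that never say when to use the skill. This is a minimal sketch under my own assumptions: the parser understands only the simple `key: value` and folded `key: >` shapes used in this guide (not full YAML), and the "Use when" check is a heuristic drawn from the advice above, not a rule Claude Code enforces.

```python
import re
import sys
from pathlib import Path


def parse_frontmatter(text: str) -> dict[str, str]:
    """Parse `key: value` frontmatter plus folded `key: >` blocks (not full YAML)."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("file must start with a '---' frontmatter line")
    fields: dict[str, str] = {}
    folding = None  # key whose folded (>) value is being collected
    for line in lines[1:]:
        if line.strip() == "---":
            return {key: value.strip() for key, value in fields.items()}
        if folding and line.startswith((" ", "\t")):
            fields[folding] += " " + line.strip()  # folded lines join with spaces
            continue
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank or unrecognized line
        key, value = key.strip(), value.strip()
        folding = key if value == ">" else None
        fields[key] = "" if value == ">" else value
    raise ValueError("frontmatter has no closing '---'")


def check_skill(text: str) -> list[str]:
    """Return problems found in a SKILL.md; an empty list means the basics look fine."""
    meta = parse_frontmatter(text)
    problems = [f"missing required field: {field}"
                for field in ("name", "description") if not meta.get(field)]
    description = meta.get("description", "")
    # Heuristic from the advice above: a good description says *when* to use the skill.
    if description and not re.search(r"\buse (when|for)\b", description, re.IGNORECASE):
        problems.append("description has no trigger phrase such as 'Use when ...'")
    return problems


if __name__ == "__main__":
    # Usage: python check_skill.py .claude/skills/*/SKILL.md
    for path in sys.argv[1:]:
        for problem in check_skill(Path(path).read_text(encoding="utf-8")):
            print(f"{path}: {problem}")
```

Running it over every skill in the repo before committing catches the "Helps with documents" class of description early, when it is cheapest to fix.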

Pre-Built Skills#

Anthropic provides Skills for common document tasks, available to all paid plans:

| Skill | What It Does |
|---|---|
| PDF | Extract text/tables, fill forms, merge/split documents |
| DOCX | Create and edit Word documents with formatting |
| PPTX | Create presentations with layouts and speaker notes |
| XLSX | Create spreadsheets with formulas, charts, data analysis |

These are automatically available — no installation needed. Claude invokes them when you work with these file types.

Creating Custom Skills#

Option 1: Use the skill-creator skill

Help me create a skill for reviewing database migrations.

Claude will interactively ask about your workflow, then generate the folder structure and SKILL.md.

Option 2: Create manually

- Create the directory:

mkdir -p .claude/skills/db-migrations

- Create SKILL.md with frontmatter:

---
name: db-migrations
description: >
  Review and create database migrations. Use when working with
  schema changes, migration files, or database versioning.
---

# Database Migration Review

## Checklist

- [ ] Migration is reversible (has down method)

- [ ] No data loss in down migration

- [ ] Indexes added for new foreign keys

- [ ] Large tables use batched updates

## Commands

- `npm run db:migrate` — Run pending migrations

- `npm run db:rollback` — Revert last migration

- `npm run db:status` — Show migration status

- (Optional) Add supporting files for detailed reference material

Tip: Keep SKILL.md under 500 lines. If you exceed this, split detailed content into separate files and link to them.

Skills with Executable Scripts#

Skills can include scripts that Claude runs as tools:

.claude/skills/data-analysis/
├── SKILL.md
└── scripts/
    ├── profile-csv.py      # Claude can execute this
    └── detect-outliers.py

In your SKILL.md:

## Available Scripts

- `./scripts/profile-csv.py <file>` — Generate column statistics and type inference

- `./scripts/detect-outliers.py <file> <column>` — Find statistical outliers

Run these scripts to analyze data before writing transformation code.

Claude will execute these scripts when appropriate, using the output to inform its work.
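The SKILL.md above points Claude at `./scripts/profile-csv.py` without showing it. Here is one hypothetical version using only the standard library, assuming the script takes a CSV path and prints a JSON report. The JSON output format is my own choice: structured output is easy for Claude to read back and reason over.

```python
import csv
import io
import json
import sys
from statistics import mean


def infer_type(values: list[str]) -> str:
    """Return the narrowest type (int, then float) that parses every value, else str."""
    for name, cast in (("int", int), ("float", float)):
        try:
            for value in values:
                cast(value)
            return name
        except ValueError:
            continue
    return "str"


def profile(stream: io.TextIOBase) -> dict[str, dict]:
    """Compute per-column type, missing count, unique count, and numeric stats."""
    reader = csv.DictReader(stream)
    rows = list(reader)
    report = {}
    for column in reader.fieldnames or []:
        raw = [row[column] for row in rows]
        present = [v for v in raw if v not in ("", None)]  # None comes from short rows
        kind = infer_type(present) if present else "empty"
        stats = {"type": kind, "missing": len(raw) - len(present),
                 "unique": len(set(present))}
        if kind in ("int", "float"):
            numbers = [float(v) for v in present]
            stats.update(min=min(numbers), max=max(numbers), mean=mean(numbers))
        report[column] = stats
    return report


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: ./scripts/profile-csv.py data.csv
    with open(sys.argv[1], newline="", encoding="utf-8") as f:
        print(json.dumps(profile(f), indent=2))
```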

Skills vs. CLAUDE.md vs. Slash Commands#

| Aspect | CLAUDE.md | Slash Commands | Skills |
|---|---|---|---|
| Loaded | Always | When invoked (/command) | When Claude matches task |
| Invocation | Automatic | Explicit | Automatic |
| Structure | Single file | Single file | Directory with files |
| Parameters | None | $ARGUMENTS | None (context from task) |
| Best for | Universal project conventions | Explicit, repeatable workflows | Domain expertise applied automatically |
| Example | “Use snake_case everywhere” | /fix-issue 1234 | “PDF form filling” |

Decision guide:

- Rules that apply to every task → CLAUDE.md

- Workflows you trigger explicitly → Slash Command

- Expertise Claude should apply when relevant → Skill

Tips#

- Start with pre-built skills. See how Anthropic structures them before creating your own.

- Be specific in descriptions. Claude can’t use a skill it doesn’t recognize as relevant to the task.

- Use progressive disclosure. Don’t put everything in SKILL.md — link to supporting files.

- Test activation. Ask Claude “what skills are available?” and try tasks that should trigger your skill.

- Version control project skills. They’re code: review and iterate.

Subagents#

Subagents are specialized Claude instances that run in isolated context windows. When Claude delegates to a subagent, the subagent works independently and returns only its findings — not its full context — to the main conversation.

Why this matters:

- Context isolation: Subagent exploration doesn’t bloat your main conversation

- Specialization: Each subagent has tailored instructions for its domain

- Tool restrictions: Subagents can be limited to read-only operations or specific tools

- Model flexibility: Use cheaper models (Haiku) for simple tasks, powerful models (Opus) for complex ones

Think of subagents as specialists you call in for specific jobs. The generalist (main Claude) coordinates while the specialists execute.

Where They Live#

| Location | Scope |
|---|---|
| .claude/agents/ | Project (check into git) |
| ~/.claude/agents/ | Personal (all projects) |

Each subagent is a single markdown file:

.claude/agents/
├── code-reviewer.md
├── security-auditor.md
└── test-writer.md

Built-In Subagents#

Claude Code includes two subagents you don’t need to create:

Plan Subagent#

Used automatically in Plan Mode (Shift+Tab or /plan).

- Purpose: Research and gather context before proposing a plan

- Behavior: Explores codebase, reads files, analyzes patterns

- Restrictions: Cannot edit files or run mutating commands

- Why it exists: Prevents infinite nesting (subagents can’t spawn subagents)

When you’re in Plan Mode and ask Claude to understand something, it delegates research to the Plan subagent, keeping your main context clean.

Explore Subagent#

A fast, lightweight agent for codebase searches.

- Purpose: Rapid file discovery and code analysis

- Tools: Read-only tools only (ls, cat, find, git log, git diff, grep)

- Thoroughness levels: Claude specifies quick, medium, or very thorough based on the task

Claude uses Explore when it needs to search or understand code but doesn’t need to make changes.

Creating Custom Subagents#

Option 1: Interactive creation with /agents

/agents

This opens an interactive interface where you can:

- View existing subagents

- Create new subagents with Claude’s help

- Edit subagent configuration

- See all available tools (including MCP tools) for the tools field

Option 2: Create manually

Create a markdown file in .claude/agents/ with this structure:

---
name: code-reviewer
description: >
  Reviews code for bugs, style violations, and security issues.
  Use for PR reviews, pre-commit checks, or when asked to review changes.
tools: Read, Grep, Glob, Bash(git diff:*), Bash(git log:*)
model: sonnet
---

# Code Reviewer

You are a senior engineer reviewing code changes.

## Review Checklist

- Logic errors and potential bugs

- Error handling gaps

- Security concerns (injection, auth, data exposure)

- Performance issues (N+1 queries, unnecessary computation)

- Style violations per project conventions

- Test coverage gaps

## Output Format

Organize findings by severity:

1. **Blocking:** Must fix before merge

2. **Should Fix:** Important but not blocking

3. **Suggestions:** Nice-to-haves and nitpicks

Be specific. Reference line numbers. Suggest fixes.

Frontmatter Configuration#

| Field | Required | Default | Description |
|---|---|---|---|
| name | Yes | — | Identifier for the subagent |
| description | Yes | — | When Claude should use this subagent |
| tools | No | Inherit all | Comma-separated list of allowed tools |
| model | No | sonnet | opus, sonnet, haiku, or inherit |
| permissionMode | No | default | Permission handling for the subagent |
| skills | No | None | Skills to auto-load for this subagent |
| hooks | No | None | Lifecycle hooks (PreToolUse, PostToolUse, Stop) |
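To make the tools and model rows concrete, here is a sketch of how a loader might interpret those two fields. It is my own illustration, not Claude Code's parser: the "sonnet" default comes from the table above (newer versions may differ), and keeping commas inside parentheses together is a defensive choice in case a specifier like Bash(...) ever contains one; I haven't confirmed Claude Code does the same.

```python
from typing import Optional

VALID_MODELS = {"opus", "sonnet", "haiku", "inherit"}


def parse_tools(field: Optional[str]) -> Optional[list[str]]:
    """Split a `tools:` value on top-level commas; None means "inherit all tools"."""
    if field is None or not field.strip():
        return None
    tools, current, depth = [], "", 0
    for ch in field:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            tools.append(current.strip())
            current = ""
        else:
            current += ch  # commas inside Bash(...) stay with their specifier
    tools.append(current.strip())
    return [tool for tool in tools if tool]


def resolve_model(field: Optional[str], default: str = "sonnet") -> str:
    """Apply the default from the table above and reject unknown values."""
    model = (field or default).strip()
    if model not in VALID_MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {sorted(VALID_MODELS)}")
    return model
```

For the code-reviewer example, `parse_tools("Read, Grep, Glob, Bash(git diff:*), Bash(git log:*)")` yields five entries, two of them scoped Bash permissions.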

Model Selection#

| Value | When to Use |
|---|---|
| opus | Complex analysis, architectural decisions |
| sonnet | General-purpose tasks (default) |
| haiku | Simple, fast tasks (linting, formatting checks) |
| inherit | Match the main conversation’s model |

Tool Restrictions#

Restrict tools based on the subagent’s purpose:

| Subagent Type | Recommended Tools |
|---|---|
| Reviewers, auditors | Read, Grep, Glob (read-only) |
| Researchers | Read, Grep, Glob, WebFetch, WebSearch |
| Code writers | Read, Write, Edit, Bash, Glob, Grep |
| Documentation | Read, Write, Edit, Glob, Grep, WebFetch |

MCP tools: Subagents can access MCP tools from configured servers. Use the format mcp__servername__toolname. When tools is omitted, subagents inherit all tools including MCP tools.
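The mcp__servername__toolname convention is mechanical enough to script, for example when generating a subagent’s tools line from a server’s tool list. A sketch: the server and tool names used below (github, create_issue, playwright, browser_click) are illustrative, and the parser assumes a server name never itself contains a double underscore.

```python
SEPARATOR = "__"


def mcp_tool_name(server: str, tool: str) -> str:
    """Build the name used in `tools:` lists, e.g. mcp__github__create_issue."""
    return SEPARATOR.join(("mcp", server, tool))


def split_mcp_tool_name(name: str) -> tuple[str, str]:
    """Return (server, tool). Assumes the server name contains no '__'."""
    parts = name.split(SEPARATOR, 2)
    if len(parts) != 3 or parts[0] != "mcp" or not parts[1] or not parts[2]:
        raise ValueError(f"not an MCP tool name: {name!r}")
    return parts[1], parts[2]


def tools_for_server(tool_names: list[str], server: str) -> list[str]:
    """Keep only one server's tools, e.g. to build a subagent's `tools:` line."""
    prefix = mcp_tool_name(server, "")  # "mcp__github__"
    return [name for name in tool_names if name.startswith(prefix) and name != prefix]
```

Joining the result with ", " alongside built-in tools (Read, Grep, ...) gives a line you can paste into a subagent’s frontmatter.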

When Claude Auto-Delegates vs. Explicit Invocation#

Auto-delegation: Claude reads your subagent descriptions and decides when to use them:

You: Review my recent changes for security issues.

Claude: [Internally delegates to security-auditor subagent]

The security-auditor found three issues...

Explicit invocation: You can request a specific subagent:

You: Use the code-reviewer subagent to check src/auth/*.py

Claude: [Invokes code-reviewer as requested]

What triggers auto-delegation:

- Task matches a subagent’s description

- Task would benefit from isolated context

- Task matches a subagent’s specialized expertise

Tip: Write descriptions with trigger words users would actually say. “Use for PR reviews” helps Claude match “review this PR” to your subagent.

Example Subagents#

Security Auditor (Read-Only)#

---
name: security-auditor
description: >
  Audits code for security vulnerabilities. Use for security reviews,
  checking auth code, or when asked about security concerns.
tools: Read, Grep, Glob
model: opus
---

# Security Auditor

You are a security specialist reviewing code for vulnerabilities.

## Check For

- Injection vulnerabilities (SQL, command, XSS)

- Authentication/authorization flaws

- Sensitive data exposure

- Insecure cryptographic practices

- Dependency vulnerabilities

## Output Format

For each finding:

- **Severity:** Critical / High / Medium / Low

- **Location:** File and line number

- **Issue:** What's wrong

- **Risk:** What could happen

- **Fix:** How to remediate

Be thorough. Security issues are often subtle.

Test Writer#

---
name: test-writer
description: >
  Writes comprehensive tests for code. Use when adding test coverage,
  writing unit tests, or creating integration tests.
tools: Read, Write, Edit, Bash, Glob, Grep
model: sonnet
---

# Test Writer

You write thorough, maintainable tests.

## Approach

1. Read the implementation to understand behavior

2. Identify all code paths and edge cases

3. Write tests covering:
   - Happy path
   - Edge cases
   - Error conditions
   - Boundary values

## Style

- One assertion per test when possible

- Descriptive test names that explain the scenario

- Arrange-Act-Assert structure

- No mocks unless testing external services

## Verification

Run tests after writing to confirm they pass.

Documentation Writer#

---
name: docs-writer
description: >
  Writes and updates documentation. Use for README updates, API docs,
  or when asked to document code.
tools: Read, Write, Edit, Glob, Grep, WebFetch
model: sonnet
---

# Documentation Writer

You write clear, useful documentation.

## Principles

- Lead with why, not what

- Include working examples

- Keep it concise — link to details instead of repeating

- Update related docs when code changes

## Structure for READMEs

1. One-sentence description

2. Quick start (copy-paste to working state)

3. Key concepts (only if not obvious)

4. API reference (if applicable)

5. Links to deeper docs

## Before Writing

Read existing docs to match style and structure.

Hooks#

Subagents can define lifecycle hooks for custom behavior:

---
name: careful-editor
description: Makes careful, verified edits
tools: Read, Write, Edit, Bash
hooks:
  PreToolUse:
    - matcher: "Write|Edit"
      hooks:
        - type: command
          command: ./hooks/confirm-edit.sh
  PostToolUse:
    - matcher: "Write|Edit"
      hooks:
        - type: command
          command: ./hooks/verify-syntax.sh
---

| Hook | When It Runs |
|---|---|
| PreToolUse | Before the subagent uses a tool |
| PostToolUse | After the subagent uses a tool |
| Stop | When the subagent tries to finish |
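What goes inside a hook script is up to you. Below is a hypothetical Python take on the verify-syntax step (the example above uses a .sh script; you would point the PostToolUse command at this file instead). It assumes Claude Code’s hook contract as I understand it: the hook receives JSON on stdin whose tool_input carries a file_path for Write and Edit, and exiting with code 2 surfaces stderr to Claude. Verify both against the hooks reference before relying on it.

```python
import ast
import json
import sys
from pathlib import Path
from typing import Optional


def syntax_error_for(payload: dict) -> Optional[str]:
    """Return 'file:line: message' if the edited file is Python that fails to parse."""
    path = Path(payload.get("tool_input", {}).get("file_path", ""))
    if path.suffix != ".py" or not path.is_file():
        return None  # this sketch only checks Python files
    try:
        ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
    except SyntaxError as err:
        return f"{path}:{err.lineno}: {err.msg}"
    return None


def main(raw_input: str) -> int:
    problem = syntax_error_for(json.loads(raw_input))
    if problem:
        print(problem, file=sys.stderr)  # assumed: stderr reaches Claude on exit code 2
        return 2
    return 0


if __name__ == "__main__":
    # Assumed contract: the hook input arrives as JSON on stdin.
    raw = "" if sys.stdin.isatty() else sys.stdin.read()
    if raw.strip():
        sys.exit(main(raw))
```

Because the check runs after the edit, a syntax error does not undo anything; it just puts the exact file and line in front of Claude so the next step is a fix rather than a guess.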

Sharing Across Projects#

Project subagents (.claude/agents/):

- Check into git for team sharing

- Include in code review like any other code

- Document purpose in the subagent file itself

User subagents (~/.claude/agents/):

- Personal across all your projects

- Won’t be available to teammates

Tip: Start with project subagents. Promote to user subagents only if you find yourself copying them to every project.

Subagents vs. Skills vs. Slash Commands#

| Aspect | Subagents | Skills | Slash Commands |
|---|---|---|---|
| Context | Isolated | Shared with main | Shared with main |
| Invocation | Auto or explicit | Automatic | Explicit (/command) |
| Structure | Single .md file | Directory with files | Single .md file |
| Best for | Delegated tasks needing isolation | Domain expertise | Repeatable workflows |
| Example | Code review, security audit | PDF handling | /fix-issue 1234 |

Decision guide:

- Task benefits from isolated context → Subagent

- Expertise Claude should apply in main context → Skill

- Explicit workflow you trigger → Slash Command

Tips#

- Start with read-only subagents. Reviewers and auditors are safe to experiment with.

- Use Haiku for simple tasks. Linting, formatting checks, and quick searches don’t need Opus.

- Write specific descriptions. Auto-delegation depends on Claude matching your description to the task.

- Test with explicit invocation first. “Use the code-reviewer subagent on X” confirms it works before relying on auto-delegation.

- Keep subagent prompts focused. One specialty per subagent. Create multiple subagents rather than one that does everything.

MCP & Plugins#

My book AI Agents with MCP (physical; non-affiliate) covers everything you need to know about working with MCP, and then some.

MCP (Model Context Protocol) is a standardized protocol that connects Claude Code to external tools and data sources. Think of it as a universal adapter for databases, APIs, browsers, and services. Plugins are a higher-level abstraction that can bundle MCP servers, commands, agents, skills, and hooks into installable packages.

Claude Code can act as both:

- MCP Client: Connects to external MCP servers (Playwright, GitHub, Linear, etc.)

- MCP Server: Exposes Claude Code’s capabilities to other tools

Plugins vs. MCP Servers:

| Concept | What It Is | Contains |
|---|---|---|
| MCP Server | A single external service connection | Tools from that service |
| Plugin | A distributable package | MCP servers, commands, agents, skills, hooks |

Most users interact with plugins, which handle MCP configuration automatically.

Adding MCP Servers and Plugins#

MCP Servers (Direct)#

Add an MCP server directly using the CLI:

# Add to the current project (default local scope: private to you)

claude mcp add playwright npx @playwright/mcp@latest

# Add globally (all projects)

claude mcp add playwright -s user npx @playwright/mcp@latest

# List configured servers

claude mcp list

# Remove a server

claude mcp remove playwright

Note: Refer to your particular server’s installation instructions for exact commands, if available.

Plugins (Recommended)#

Plugins bundle pre-configured MCP servers with setup instructions:

# Add a marketplace (one-time)

/plugin marketplace add anthropics/claude-plugins-official

# Browse available plugins

/plugin # Opens interactive UI

# Install a plugin

/plugin install playwright@claude-plugins-official

# View installed plugins

/plugin # Go to "Installed" tab

The official Anthropic marketplace (claude-plugins-official) is automatically available.

Configuration Locations#

| Location | Scope | Use For |
|---|---|---|
| .mcp.json in project root | Project (check into git) | Team-shared MCP servers |
| .claude/settings.json | Project | Plugin and permission settings |
| ~/.claude.json | User (all projects) | Personal MCP servers |
| ~/.claude/settings.json | User | Global plugin settings |

Example .mcp.json (shareable with team):

{

"mcpServers": {

"playwright": {

"command": "npx",

"args": ["@playwright/mcp@latest"]

},

"github": {

"command": "npx",

"args": ["-y", "@modelcontextprotocol/server-github"],

"env": {

"GITHUB_PERSONAL_ACCESS_TOKEN": "${GITHUB_TOKEN}"

}

}

}

}

Check .mcp.json into git so teammates get the same tool access.
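The ${GITHUB_TOKEN} reference is what keeps the secret itself out of git: the value is filled in from each teammate’s environment. The sketch below mimics that substitution so you can see (or pre-check) what a config resolves to. It assumes the ${VAR} and ${VAR:-default} forms, which is my understanding of what Claude Code supports, and treats an unset variable with no default as an error; it is an illustration, not Claude Code’s loader.

```python
import os
import re
from typing import Any, Mapping

PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)(?::-([^}]*))?\}")


def expand(value: Any, env: Mapping[str, str] = os.environ) -> Any:
    """Recursively replace ${VAR} and ${VAR:-default} in strings, lists, and dicts."""
    if isinstance(value, str):
        def substitute(match: re.Match) -> str:
            name, default = match.group(1), match.group(2)
            if name in env:
                return env[name]
            if default is not None:
                return default
            raise KeyError(f"environment variable {name} is not set and has no default")
        return PLACEHOLDER.sub(substitute, value)
    if isinstance(value, list):
        return [expand(item, env) for item in value]
    if isinstance(value, dict):
        return {key: expand(item, env) for key, item in value.items()}
    return value  # numbers, booleans, and None pass through unchanged
```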

Caution: Tool Collision and Context Cost#

Tool Collision#

Multiple MCP servers (or an MCP server and a CLI Claude already uses) can expose overlapping tools, causing confusion:

- GitHub MCP and gh CLI both handle issues/PRs

- Multiple LSP servers for the same language

Fix: Disable or remove overlapping servers. Use /mcp to see all active tools.

Context Cost#

Each MCP server consumes context. Too many servers = wasted tokens.

/context # Check context usage

Best practice: Install only what you actively use. Disable servers for projects that don’t need them.

Security#

Plugins and MCP servers execute code on your machine.

- Review before installing: Check the source repository

- Prefer official marketplaces: Anthropic-maintained plugins are vetted

- Be cautious with env vars: literal tokens in .mcp.json may be committed accidentally, so reference them as ${VAR} instead (as in the example above)
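A cheap guard against that accident is to fail a pre-commit check whenever an env value in .mcp.json is a literal instead of a ${VAR} reference. A minimal sketch; the script name and the policy "every env value must be a pure placeholder" are my own assumptions, and it will also flag harmless literals, which you can then review by hand.

```python
import json
import re
import sys

PLACEHOLDER = re.compile(r"^\$\{[A-Za-z_][A-Za-z0-9_]*(?::-[^}]*)?\}$")


def literal_env_values(config: dict) -> list[str]:
    """Return 'server.KEY' for every env value that is not a pure ${VAR} reference."""
    findings = []
    for server, spec in config.get("mcpServers", {}).items():
        for key, value in spec.get("env", {}).items():
            if not PLACEHOLDER.match(str(value)):
                findings.append(f"{server}.{key}")
    return findings


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_mcp_env.py .mcp.json  (exit 1 lets it block a commit)
    with open(sys.argv[1], encoding="utf-8") as f:
        found = literal_env_values(json.load(f))
    for item in found:
        print(f"literal env value, possible secret: {item}")
    sys.exit(1 if found else 0)
```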

Useful Plugins and MCP Servers#

Language Server Protocol (LSP)#

Give Claude real-time code intelligence: type checking, go-to-definition, find references.

# Add the community LSP marketplace

/plugin marketplace add boostvolt/claude-code-lsps

# Install for your language

/plugin install pyright@claude-code-lsps # Python

/plugin install vtsls@claude-code-lsps # TypeScript

/plugin install rust-analyzer@claude-code-lsps # Rust

/plugin install gopls@claude-code-lsps # Go

Requirements: The underlying language server binary must be in your PATH:

pip install pyright # Python

npm install -g @vtsls/language-server # TypeScript

rustup component add rust-analyzer # Rust

go install golang.org/x/tools/gopls@latest # Go

Note: LSP support is maturing. As of v2.1.0+, most issues are resolved, but check the boostvolt/claude-code-lsps repo for current status.

Browser Automation: Playwright#

Automate browser testing and visual verification.

# Via MCP directly

claude mcp add playwright npx @playwright/mcp@latest

# Or via plugin

/plugin marketplace add fcakyon/claude-codex-settings

/plugin install playwright-tools@claude-settings

Use cases:

- Visual iteration: screenshot → compare → adjust

- E2E test generation

- Form filling and UI testing

- Debugging frontend issues with real browser

Project Management: Linear#

Create issues, manage projects, track work.

/plugin marketplace add fcakyon/claude-codex-settings

/plugin install linear-tools@claude-settings

/linear-tools:setup # OAuth setup

Code Collaboration: GitHub#

Beyond gh CLI — deeper GitHub integration.

/plugin install github@claude-plugins-official

Or configure directly:

{

"mcpServers": {

"github": {

"command": "npx",

"args": ["-y", "@modelcontextprotocol/server-github"],

"env": {

"GITHUB_PERSONAL_ACCESS_TOKEN": "${GITHUB_TOKEN}"

}

}

}

}

Team Communication: Slack#

Search messages and channel history.

/plugin install slack-tools@claude-settings

/slack-tools:setup # OAuth setup

Hooks Made Easy: Hookify#

Create hooks without editing JSON — use markdown config files instead.

/plugin install hookify@claude-plugins-official

Then create rules conversationally:

Create a hookify rule that warns me before any rm -rf command

Hookify generates .claude/hookify.*.md files that take effect immediately.

Autonomous Loops: Ralph Wiggum#

Run Claude in a persistent loop until a task completes.

/plugin install ralph-wiggum@claude-plugins-official

Usage:

/ralph-wiggum:ralph-loop "Migrate all tests from Jest to Vitest" --max-iterations 50 --completion-promise "All tests pass"

How it works: A Stop hook intercepts Claude’s exit, re-injects the prompt, and Claude continues. Each iteration sees the modified files from previous runs.

Best for: Batch operations with clear success criteria (migrations, refactors, test coverage). Not for judgment-heavy work.

See the Multi-Agent Patterns section for more on autonomous workflows.

Code Review Agents#

Multi-agent code review with specialized passes:

/plugin install code-review-agents@claude-plugins-official

Deploys multiple agents that review from different angles: security, performance, readability, testing, each with confidence scores.

Debugging MCP Issues#

--mcp-debug Flag#

Launch Claude Code with debug output for MCP:

claude --mcp-debug

This logs MCP server startup, connection issues, and tool registration. Newer releases mark --mcp-debug as deprecated; use --debug there instead.

Common Issues#

| Symptom | Likely Cause | Fix |
|---|---|---|
| “No tools available” | Server didn’t start | Check binary is in PATH |
| Tool not appearing | Server not registered | Run claude mcp list |
| Authentication failed | Missing/invalid token | Check env vars in config |
| “Connection refused” | Server crashed on start | Check --mcp-debug output |

Checking Active Tools#

/mcp # Interactive view of all MCP servers and tools

Navigate to a specific server to see its available tools and test them.

Tips#

- Start with plugins over raw MCP. Plugins handle configuration and provide setup commands.

- Check .mcp.json into git. Team members get the same tools automatically.

- Use /context to monitor. MCP servers consume context — disable unused ones.

- One server per concern. Don’t install GitHub MCP if gh CLI handles your needs.

- Read plugin docs. Many plugins have /plugin-name:setup commands for OAuth or configuration.

Multi-Agent Patterns#

Why Multiple Agents?#

A single Claude session works well for focused tasks. But complex work benefits from parallelization and separation of concerns:

- Parallel execution: Multiple Claudes work on different parts simultaneously

- Context isolation: Each agent has clean context, no drift from accumulated history

- Specialization: Dedicated agents for writing, reviewing, testing

- Verification: Independent agents catch mistakes the original missed

The patterns below range from simple (git worktrees) to sophisticated (adversarial validation).

Git Worktrees for Parallel Work#

Git worktrees let you check out multiple branches from the same repo into separate directories. Each worktree is isolated — run a separate Claude session in each.

Setup#

# Create worktrees for different tasks

git worktree add ../project-feature-a feature-a

git worktree add ../project-bugfix-b bugfix-b

git worktree add ../project-refactor-c refactor-c

# Each worktree is a full working directory; start each session in its own terminal tab

cd ../project-feature-a && claude

cd ../project-bugfix-b && claude

cd ../project-refactor-c && claude

Workflow#

- Identify independent tasks — features, bugfixes, refactors that don’t overlap

- Create a worktree per task with its own branch

- Open terminal tabs for each worktree

- Run Claude in each — they work in parallel

- Cycle through to check progress, approve permissions, provide guidance

- Clean up when done:

git worktree remove ../project-feature-a

Tips#

- Use consistent naming: ../project-{task-name}

- Set up terminal notifications: Claude Code can notify when it needs attention (see /terminal-setup)

- Open separate IDE windows for each worktree if reviewing code

- Don’t overlap files: If two agents edit the same file, you’ll have merge conflicts
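The "don’t overlap files" rule can be checked before you launch anything. A sketch, assuming you can list the files each task will touch, either from a plan you write up front or, once work has started, from `git diff --name-only main...feature-a` (the changed_files helper shells out to exactly that; the base branch name main is an assumption).

```python
import subprocess
from itertools import combinations


def changed_files(branch: str, base: str = "main") -> set[str]:
    """Files changed on `branch` since it forked from `base` (runs git; optional)."""
    out = subprocess.run(["git", "diff", "--name-only", f"{base}...{branch}"],
                         capture_output=True, text=True, check=True).stdout
    return {line for line in out.splitlines() if line}


def overlapping_tasks(plan: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Map each pair of tasks to the files both would touch (empty dict means safe)."""
    clashes = {}
    for (task_a, files_a), (task_b, files_b) in combinations(sorted(plan.items()), 2):
        shared = files_a & files_b
        if shared:
            clashes[(task_a, task_b)] = shared
    return clashes
```

If two tasks share a file, either merge them into one worktree or sequence them so the second starts from the first’s merged result.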

Write → Review → Integrate Cycles#

Use separate Claude sessions for different roles, similar to human code review.

Pattern#

Session 1 (Writer):

"Implement the rate limiter for the API endpoints."

→ Claude writes code

Session 2 (Reviewer):

/clear

"Review the changes in src/ratelimit/. Check for bugs, security issues,

and missed edge cases. Don't fix anything — just write the issues to REVIEW.md."

→ Claude reviews, produces feedback

Session 3 (Integrator):

/clear

"Read the code in src/ratelimit/ and the review feedback in REVIEW.md.

Address each issue, then commit."

→ Claude fixes issues based on review

Why This Works#

- Fresh context: The reviewer hasn’t seen the implementation journey, only the result

- No confirmation bias: A separate agent is more likely to spot issues

- Documented feedback: Writing issues to a file creates an artifact for the integrator

Variations#

- Writer + Tester: One agent writes code, another writes tests to verify it

- Implement + Security Audit: Implementation agent, then security-auditor subagent

- Draft + Edit: One agent drafts documentation, another edits for clarity

Headless Mode for Automation#

Headless mode (-p) runs Claude without interactive prompts — ideal for CI, scripts, and batch operations.

Basic Usage#

# Single prompt, get response

claude -p "Summarize the changes in the last 5 commits"

# JSON output for parsing

claude -p "List all TODO comments in src/" --output-format json

# Streaming JSON for real-time processing (with -p, stream-json also requires --verbose)

claude -p "Analyze this log file" --output-format stream-json --verbose < error.log
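When a script needs Claude’s reply rather than raw text, parse the JSON. This sketch assumes the object printed by --output-format json carries the reply in a result field, and that stream-json emits one JSON object per line ending with an event of type "result" carrying the same field. Both match my understanding of current output, but check a real run before depending on them. run_claude needs the CLI installed; final_result is pure parsing.

```python
import json
import subprocess
from typing import Iterable, Optional


def run_claude(prompt: str) -> str:
    """Run `claude -p` with JSON output and return the reply text (needs the CLI)."""
    completed = subprocess.run(
        ["claude", "-p", prompt, "--output-format", "json"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(completed.stdout)["result"]


def final_result(lines: Iterable[str]) -> Optional[str]:
    """Return the reply from stream-json output (one JSON object per line)."""
    for line in lines:
        if not line.strip():
            continue
        event = json.loads(line)
        if event.get("type") == "result":
            return event.get("result")
    return None
```

Passing the stdout of `subprocess.Popen(..., stdout=subprocess.PIPE, text=True)` to final_result lets you react to the stream as it arrives instead of waiting for the process to finish.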

CI Integration Examples#

Pre-commit hook:

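#!/bin/bash
# Pipe the staged diff in so Claude reviews exactly what is about to be committed.
# jq -e exits non-zero (blocking the commit) unless the reply starts with PASS.
git diff --cached | claude -p "Review this diff for obvious bugs or security issues.
Output PASS if ok, FAIL with reasons if not." \
  --output-format json | jq -e '.result | startswith("PASS")'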

PR Labeling (GitHub Action):

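- name: Label PR
  run: |
    # Send the PR diff to Claude; --output-format json wraps the reply in a "result" field
    LABELS=$(gh pr diff ${{ github.event.number }} \
      | claude -p "Suggest labels for this PR diff from: bug, feature, docs,
        refactor, breaking-change. Output only a JSON array of label names." \
        --output-format json \
      | jq -r '.result | fromjson | join(",")')
    # gh pr edit expects comma-separated label names, not a JSON array
    gh pr edit ${{ github.event.number }} --add-label "$LABELS"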

Fan-Out Pattern#

Process many items in parallel by spawning multiple headless Claude calls:

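# Generate a task list; with --output-format json, Claude's reply is the "result" string
claude -p "List all files needing migration from CommonJS to ESM.
Output only JSON like {\"files\": [\"src/a.js\"]}." \
  --output-format json > tasks.json

# Decode the reply and process files four at a time (xargs -P runs them in parallel)
jq -r '.result | fromjson | .files[]' tasks.json \
  | xargs -P 4 -I {} claude -p "Migrate {} from CommonJS to ESM.
Return OK if successful, FAIL if not." --allowedTools Edit Bash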

Pipeline Pattern#

Chain Claude into data processing pipelines:

# Analyze → Transform → Report (plain-text output pipes straight into the next call)
cat error.log \
  | claude -p "Extract all unique error types" \
  | claude -p "For each error type, suggest a fix" \
  | claude -p "Format as a markdown report" > error-report.md

Adversarial Validation#

Use multiple models or agents to reach consensus on critical code.

The Problem#

A single model can confidently produce incorrect code. For high-stakes changes (auth, payments, data handling), independent verification catches errors.

Pattern: 2-of-3 Consensus#

# Have Claude implement

claude -p "Implement the token refresh logic per the spec in AUTH_SPEC.md"

# Review with a different model/approach

claude -p "Review the token refresh implementation.

List any security issues or spec violations." --model opus

# If needed, get a third opinion

# Use a different agent or even a different AI system

Multi-Model Orchestration#

Some teams use the multi-agent-ralph-loop pattern:

- Claude, Codex, and Gemini review critical code in parallel

- 2-of-3 must approve for the change to pass

- Disagreements trigger iteration until consensus
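The 2-of-3 rule itself is simple to script once each review is reduced to a verdict. A sketch, assuming each reviewer (whatever model or CLI produces it) is asked to begin its reply with APPROVE or REJECT; that convention and the reviewer labels used below are hypothetical, not part of any tool.

```python
def parse_verdict(review: str) -> bool:
    """Hypothetical convention: each reviewer's reply starts with APPROVE or REJECT."""
    lines = review.strip().splitlines()
    first = lines[0].strip().upper() if lines else ""
    if first.startswith("APPROVE"):
        return True
    if first.startswith("REJECT"):
        return False
    raise ValueError(f"no verdict on the first line: {first!r}")


def gate(verdicts: dict[str, bool], required: int = 2) -> tuple[str, list[str]]:
    """'approve' if at least `required` reviewers approve, else 'iterate'.

    Also returns the dissenters, whose objections feed the next iteration.
    """
    if len(verdicts) < required:
        raise ValueError("fewer reviewers than required approvals")
    dissent = sorted(name for name, approved in verdicts.items() if not approved)
    approvals = len(verdicts) - len(dissent)
    return ("approve" if approvals >= required else "iterate"), dissent
```

Returning the dissenters, rather than just a boolean, is what makes "iterate until consensus" workable: their reviews are the feedback for the next pass.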

When to Use#

- Authentication and authorization code

- Payment processing

- Data validation and sanitization

- Security-sensitive operations

- Breaking API changes

When It’s Overkill#

- Routine refactors with good test coverage

- Documentation changes

- UI tweaks with visual verification

Ralph Wiggum: Autonomous Loops#

Ralph Wiggum is a plugin that runs Claude in a persistent loop until a task completes or iterations run out.

How It Works#

- You define a task and completion criteria

- Claude attempts the task

- When Claude tries to exit, a Stop hook intercepts

- The original prompt is re-injected

- Claude sees modified files from previous attempts and continues

- Loop until success or max iterations
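The core of that loop is a single decision made each time Claude tries to stop. Below is a sketch of that decision, not the plugin’s source. It assumes a Stop hook can keep Claude going by printing {"decision": "block", "reason": ...}, with the reason handed back to Claude, which is my understanding of Claude Code’s hook output. A real hook would also read its JSON input, pull the last reply from the transcript, and persist the iteration count; those parts are omitted.

```python
import json
from typing import Optional


def stop_decision(last_reply: str, iteration: int, max_iterations: int,
                  promise: str, prompt: str) -> Optional[dict]:
    """Return a 'block' decision to run another pass, or None to let Claude stop."""
    if promise in last_reply:
        return None  # completion promise seen
    if iteration >= max_iterations:
        return None  # safety limit reached
    # Blocking the stop and handing back the original prompt starts the next pass;
    # files changed in earlier passes are still on disk for Claude to build on.
    return {"decision": "block", "reason": prompt}


if __name__ == "__main__":
    decision = stop_decision("Fixed 12 of 40 failing tests.", iteration=3, max_iterations=50,
                             promise="All tests pass",
                             prompt="Migrate all tests from Jest to Vitest")
    # A Stop hook would print this JSON to stdout; None means exit quietly.
    print(json.dumps(decision) if decision else "allow stop")
```

Plain substring matching is the weak spot: a reply that merely mentions the promise phrase would end the loop, which is why a distinctive completion string is worth choosing.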

Installation#

/plugin install ralph-wiggum@claude-plugins-official

Usage#

/ralph-wiggum:ralph-loop "Migrate all tests from Jest to Vitest" \

--max-iterations 50 \

--completion-promise "All tests pass"

Key Parameters#

| Parameter | Purpose |
|---|---|
| --max-iterations | Safety limit (default varies) |
| --completion-promise | String Claude must output to signal completion |

When to Use Ralph Wiggum#

| Good Fit | Why |
|---|---|
| Test migrations | Clear success: tests pass |
| Linter fixes | Clear success: no lint errors |
| Bulk refactors | Clear success: code compiles, tests pass |
| Documentation generation | Clear success: all files documented |
| Type annotation | Clear success: type checker passes |

When NOT to Use#

| Bad Fit | Why |
|---|---|
| Architecture decisions | No objective success criteria |
| UI design | Subjective quality judgment |
| Code that needs human review | Loop won’t stop for feedback |
| Exploratory work | No clear endpoint |

Cost Awareness#

Autonomous loops consume tokens continuously. Set realistic --max-iterations and monitor costs. A 50-iteration loop on a large refactor can cost $10-50+ in API usage.

Alternative: DIY Loops#

Without the plugin, you can script similar behavior:

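#!/bin/bash
MAX_ITERATIONS=20
for i in $(seq 1 "$MAX_ITERATIONS"); do
  # Headless runs can't answer permission prompts, so allow edits up front;
  # jq pulls the reply text out of the JSON envelope.
  result=$(claude -p "Continue migrating tests. Output DONE on its own line when complete." \
    --output-format json --allowedTools Edit Bash | jq -r '.result')
  # Match DONE as a whole line so "not DONE yet" doesn't end the loop
  if echo "$result" | grep -qx "DONE"; then
    echo "Completed in $i iterations"
    exit 0
  fi
done
echo "Max iterations reached"
exit 1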

Choosing a Pattern#

| Situation | Pattern |
|---|---|
| Multiple independent features | Git worktrees |
| Need fresh eyes on code | Write → Review → Integrate |
| CI/CD automation | Headless mode |
| Processing many similar items | Fan-out |
| Security-critical changes | Adversarial validation |
| Batch work with clear success criteria | Ralph Wiggum |

Tips#

- Start simple. Git worktrees + multiple terminals cover most parallel needs.

- Define success criteria. Autonomous patterns require objective verification (tests, linters, type checkers).

- Monitor costs. Parallel and looping patterns multiply token usage.

- Use subagents first. Before spawning separate sessions, check if a subagent provides enough isolation.

- Set iteration limits. Always cap autonomous loops to prevent runaway costs.

- Log progress. Have agents write to files so you can track what happened across sessions.

Further Reading#

Claude Agent SDK#

For building custom agents programmatically (rather than using Claude Code interactively), Anthropic provides the Claude Agent SDK. It exposes the same primitives that power Claude Code — tools, context management, compaction — in TypeScript and Python.

Related Tools#

Tools in the broader agent ecosystem that complement Claude Code:

| Tool | Purpose | Link |
|---|---|---|
| Deciduous | Decision logging: capture and review decisions made during agent sessions | GitHub |
| Letta | Persistent agent memory based on the MemGPT paper: for long-running agents that need memory beyond context windows | letta.com |